I built the Reddit Scraper to give you a steady stream of real-world content ideas and warm leads without running ads. This guide walks you through setting up the sheet, configuring the tool, running scrapes, and using the outputs to create content, do market research, and find outreach opportunities.

What the Reddit Scraper does

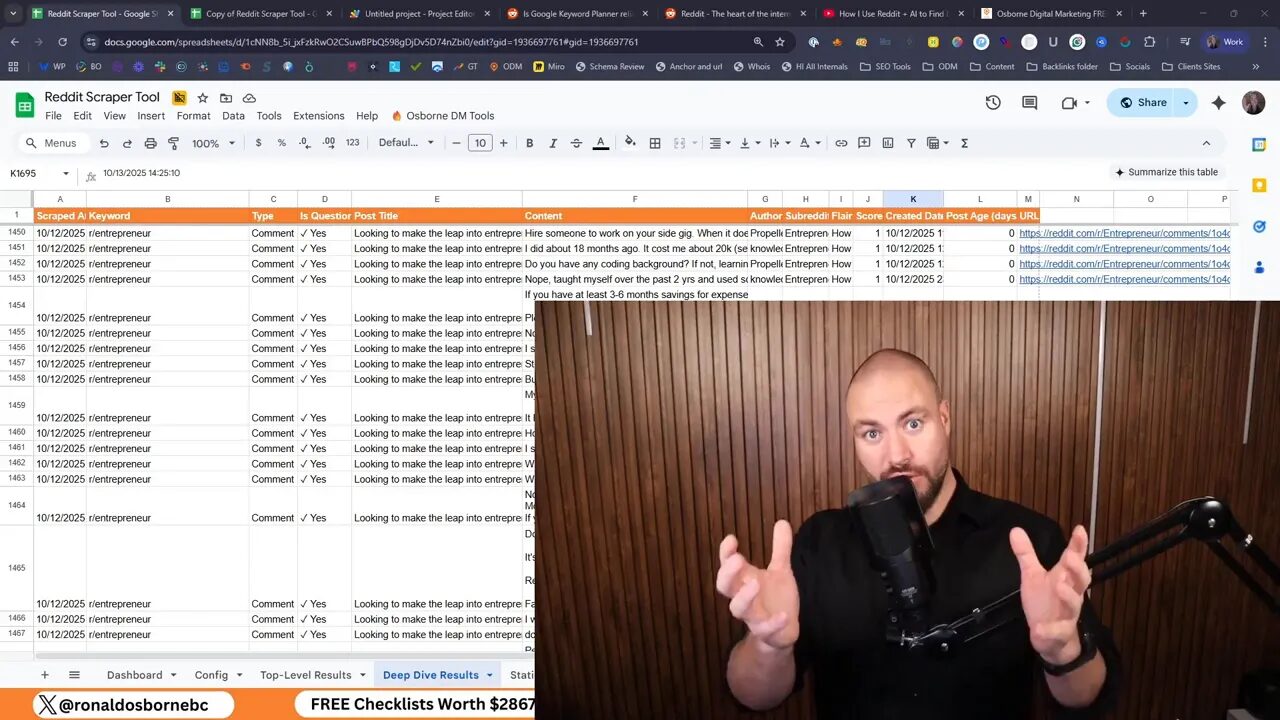

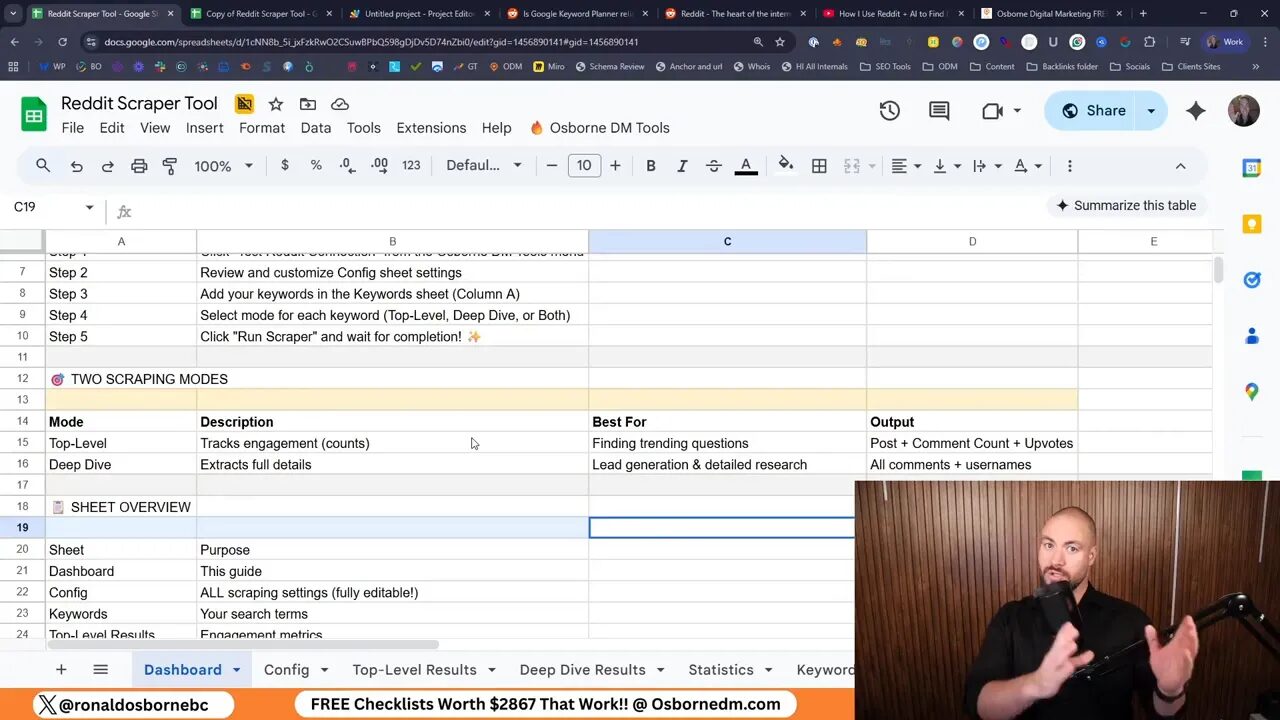

The Reddit Scraper connects Google Sheets to the official Reddit API to pull structured data from subreddits or keyword searches. It runs in two modes so you can do both quick scans and deep conversation retrieval:

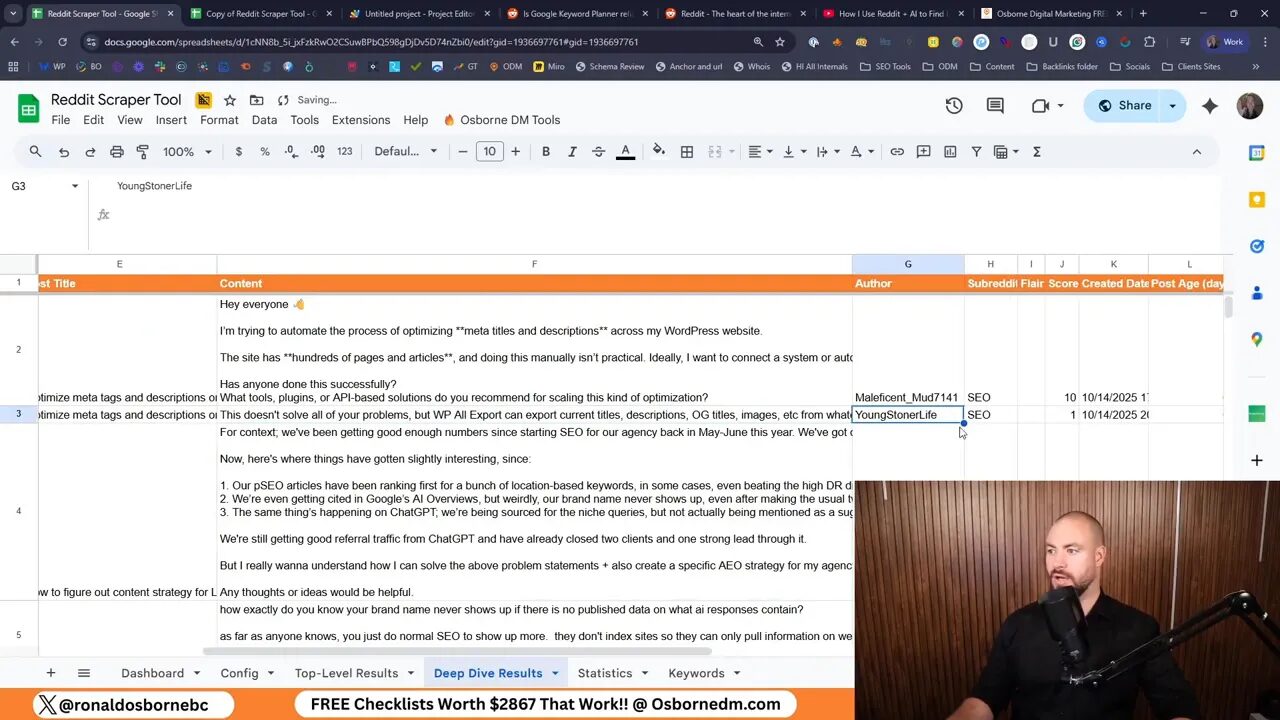

- Mode 1 - Top-Level Analysis: pulls post title, author, subreddit, flair, upvotes, comment count, post age, question detection flag, and a calculated engagement score.

- Mode 2 - Deep Dive: pulls full post text and comments, including comment author, score, and timestamp so you can analyze conversations and capture user data for outreach.
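To make the two modes concrete, here is a minimal Python sketch of the kind of record each one produces. The sheet itself runs on Apps Script; the class and field names below are illustrative assumptions based on the columns listed above, not the sheet's actual headers.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import List


@dataclass
class TopLevelRow:
    """One Mode 1 row: post metadata only (field names are illustrative)."""
    title: str
    author: str
    subreddit: str
    flair: str
    upvotes: int
    comment_count: int
    created_utc: float  # Unix timestamp in seconds
    is_question: bool = False

    def age_days(self, now: datetime) -> float:
        """Post age in days relative to a timezone-aware `now`."""
        created = datetime.fromtimestamp(self.created_utc, tz=timezone.utc)
        return (now - created).total_seconds() / 86400


@dataclass
class CommentRow:
    """One comment captured in Mode 2."""
    author: str
    score: int
    created_utc: float
    body: str


@dataclass
class DeepDiveRow(TopLevelRow):
    """One Mode 2 row: the Mode 1 fields plus full text and comments."""
    body: str = ""
    comments: List[CommentRow] = field(default_factory=list)
```

The point of the shape is that a deep-dive row is a top-level row with more attached, which is why deep dives cost more API calls and more time.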

Why I built the Reddit Scraper

Every day your customers ask questions on Reddit and your competitors are often too slow to answer. The Reddit Scraper finds those questions, scores engagement so you can prioritize, and extracts comment threads so you can research or reach out to active users. It is free, runs entirely in Google Sheets, and uses the official Reddit API so the data is clean and reliable.

Quick start: get the sheet and API set up

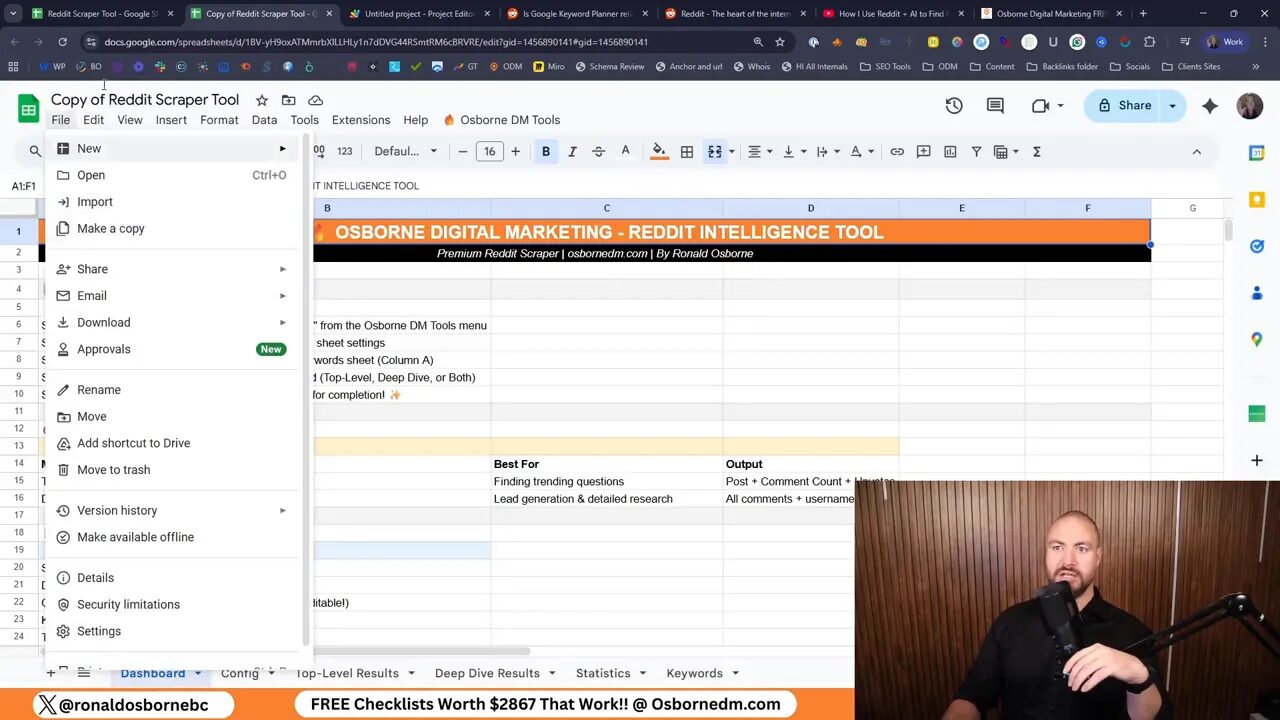

- Go to the download link and make a copy of the Google Sheet to your Drive.

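- Create Reddit API credentials at reddit.com/prefs/apps and note your Client ID and Secret. You also need a User Agent, which is a short descriptive string you choose yourself rather than something Reddit issues.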

- Open the sheet and paste the credentials into the script as instructed.

- Run the setup menu under Osborne DM Tools and choose "Setup All Sheets".

- Run "Test Reddit Connection" to confirm authentication.
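If you are curious what a connection test checks, the sketch below builds the token request that Reddit's OAuth endpoint expects for an app-only login: HTTP Basic auth with your Client ID and Secret, plus your User Agent. It is written in Python for illustration only. The sheet does this in Apps Script, and I am assuming here that it uses the `client_credentials` grant. The function name is hypothetical, and the request is built but not sent.

```python
import base64
import urllib.parse
import urllib.request

TOKEN_URL = "https://www.reddit.com/api/v1/access_token"


def build_token_request(client_id: str, secret: str, user_agent: str) -> urllib.request.Request:
    """Build (but do not send) an app-only OAuth token request."""
    # Reddit expects "client_id:secret" as HTTP Basic credentials.
    basic = base64.b64encode(f"{client_id}:{secret}".encode()).decode()
    # Assumed grant type: application-only access, no user login.
    body = urllib.parse.urlencode({"grant_type": "client_credentials"}).encode()
    return urllib.request.Request(
        TOKEN_URL,
        data=body,  # supplying a body makes this a POST
        headers={
            "Authorization": f"Basic {basic}",
            "User-Agent": user_agent,
        },
    )
```

Sending it with `urllib.request.urlopen(req)` should return JSON containing an `access_token` when the credentials are valid. A 401 response usually means the Client ID or Secret was pasted incorrectly.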

How to configure the Reddit Scraper

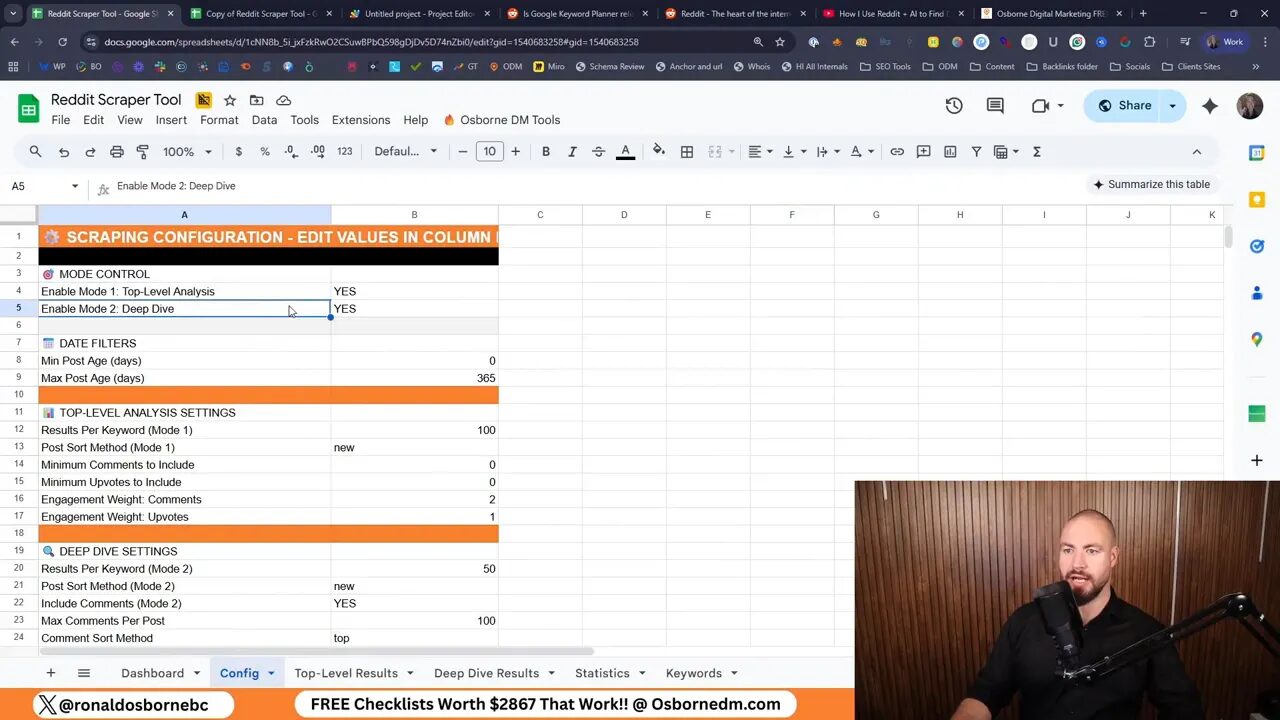

Open the Config tab and choose which mode to enable. I recommend keeping one mode on at a time for clarity. Key settings to adjust:

- Post age limit (for example, 365 days).

- Results per keyword (top-level can go up to 100; deep dive should be lower because it pulls more data).

- Sort method: new, hot, top, etc. Use new to capture fresh discussions.

- Minimum comments and minimum upvotes to filter noise.

- Include comments and max comments per post (I set 100 as a sensible default).

- Question detection options: detect question marks, question words, or only posts flaired as questions.

- Delay between API calls to avoid hitting Reddit rate limits.
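The settings above boil down to a handful of values plus a filter applied to each post. Here is a minimal Python sketch of that idea. The names and the default delay are assumptions for illustration, and the sheet's own Apps Script may structure this differently.

```python
from dataclasses import dataclass


@dataclass
class ScrapeConfig:
    """Illustrative mirror of the Config tab (names are hypothetical)."""
    max_age_days: int = 365
    results_per_keyword: int = 100
    sort: str = "new"
    min_comments: int = 0
    min_upvotes: int = 0
    include_comments: bool = True
    max_comments_per_post: int = 100
    delay_seconds: float = 1.0  # assumed value; pick what suits your rate limit


def passes_filters(post: dict, cfg: ScrapeConfig) -> bool:
    """Keep a post only if it is recent enough and clears both minimums."""
    return (
        post["age_days"] <= cfg.max_age_days
        and post["comments"] >= cfg.min_comments
        and post["upvotes"] >= cfg.min_upvotes
    )
```

For example, `ScrapeConfig(min_comments=5, min_upvotes=10)` drops a two-day-old post with only four comments, however many upvotes it has.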

Adding targets: subreddits and keywords

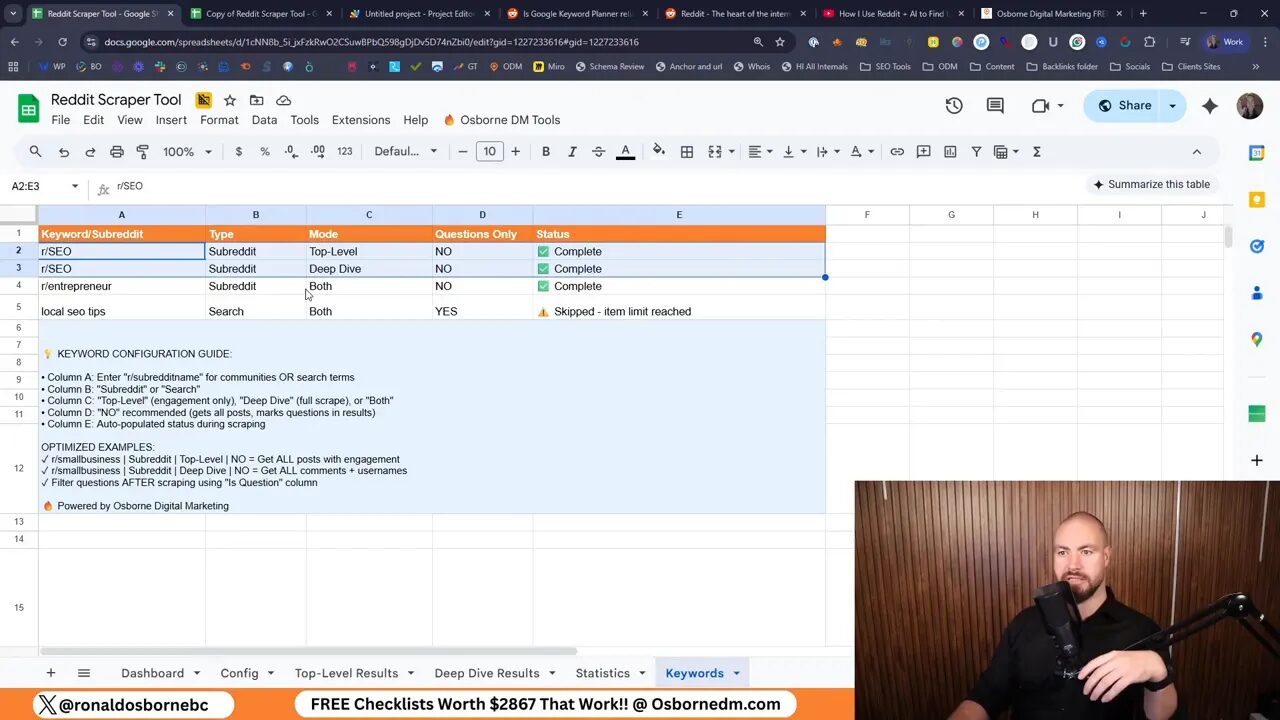

On the Keywords sheet, add targets in three columns: type (subreddit or search), the subreddit or keyword, and the mode (top-level or deep dive). For example, add r/SEO as a subreddit type or add "local SEO tips" as a search term. The Reddit Scraper can process both target types in the same run, so you can combine subreddit monitoring with keyword search.
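Each row on the Keywords sheet ends up as a request to one of two Reddit API endpoints: a subreddit listing or a site-wide search. The Python sketch below shows one way that mapping could look. The function name is hypothetical and the sheet's Apps Script may build its URLs differently, but the endpoint paths and the 100-item cap per request come from Reddit's API.

```python
from urllib.parse import quote, urlencode

API_BASE = "https://oauth.reddit.com"


def build_listing_url(target_type: str, value: str, sort: str = "new", limit: int = 100) -> str:
    """Turn one Keywords row into a Reddit API URL."""
    limit = max(1, min(limit, 100))  # Reddit returns at most 100 items per request
    if target_type == "subreddit":
        name = value.strip()
        if name.lower().startswith("r/"):
            name = name[2:]  # accept both "r/SEO" and "SEO"
        return f"{API_BASE}/r/{quote(name)}/{sort}?{urlencode({'limit': limit})}"
    if target_type == "search":
        query = urlencode({"q": value, "sort": sort, "limit": limit})
        return f"{API_BASE}/search?{query}"
    raise ValueError(f"unknown target type: {target_type!r}")
```

With the two examples above, `build_listing_url("subreddit", "r/SEO")` gives `https://oauth.reddit.com/r/SEO/new?limit=100`, and `build_listing_url("search", "local SEO tips")` gives `https://oauth.reddit.com/search?q=local+SEO+tips&sort=new&limit=100`.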

Running a scrape and what to expect

Open the Osborne DM Tools menu and click Run Scraper. Depending on your result limits and the delay between API calls, scrapes can take anywhere from a few minutes to 20 minutes or more. Watch the Statistics sheet for runtime, item counts, and success rates.
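You can get a rough feel for how long a run will take before you start it. The Python sketch below is a back-of-the-envelope estimate, assuming one API call per target for the post listing plus one extra call per post in deep-dive mode to fetch comments. The call pattern and the half-second latency figure are my assumptions, not measurements from the sheet.

```python
def estimate_runtime_seconds(
    top_level_targets: int,
    deep_dive_targets: int,
    posts_per_target: int,
    delay_seconds: float,
    latency_seconds: float = 0.5,  # assumed average response time per call
) -> float:
    """Rough runtime: total API calls times (delay + latency)."""
    listing_calls = top_level_targets + deep_dive_targets
    comment_calls = deep_dive_targets * posts_per_target  # one call per post
    return (listing_calls + comment_calls) * (delay_seconds + latency_seconds)
```

Under those assumptions, five top-level targets and two deep-dive targets at 50 posts each with a 1-second delay come to about 160 seconds, while ten deep-dive targets at 100 posts each come to roughly 25 minutes. Deep dives dominate the runtime, which is why their result limit should stay lower.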

Understanding the results

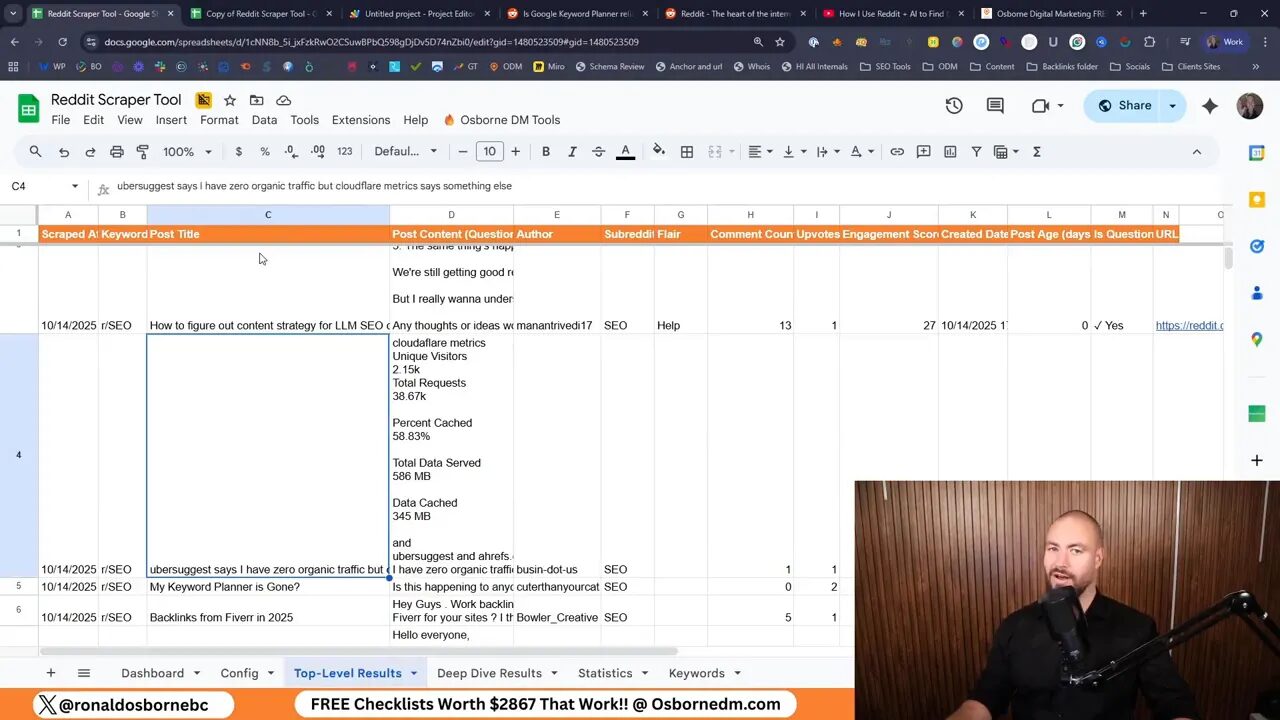

After the scrape completes, review two places:

- Top-Level Results: an overview list of posts and metadata that is perfect for content idea discovery and quick filtering.

- Deep-Dive Results: full posts and comments with author handles, timestamps, scores, and the conversation context you need for outreach or sentiment analysis.

The sheet also calculates an engagement score weighted by comments and upvotes. Use that to prioritize threads that deserve your attention first.
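As an illustration of how a weighted score like this works, here is a minimal Python sketch. The weights are placeholders I picked for the example (comments counted double); the sheet's actual formula and weights may differ.

```python
def engagement_score(
    upvotes: int,
    comments: int,
    upvote_weight: float = 1.0,   # placeholder weight
    comment_weight: float = 2.0,  # placeholder weight: comments count double
) -> float:
    """Weighted sum of upvotes and comments; negative inputs count as zero."""
    return upvote_weight * max(upvotes, 0) + comment_weight * max(comments, 0)


def rank_by_engagement(posts: list) -> list:
    """Return posts sorted from most to least engaged."""
    return sorted(
        posts,
        key=lambda p: engagement_score(p["upvotes"], p["comments"]),
        reverse=True,
    )
```

With these weights, a post with 10 upvotes and 30 comments (score 70) outranks one with 50 upvotes and 2 comments (score 54), which reflects that an active discussion is usually a better opening than a popular but quiet post.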

Using scraped data for content and leads

Use the Reddit Scraper to discover real questions you can answer with blog posts, videos, or social content. For lead generation, export the deep-dive user data and reach out to active contributors who demonstrate intent or need. The tool gives you the live URL to the post; from there you can visit the thread and engage respectfully.
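One simple way to find active contributors in an export of the deep-dive data is to count comments per author. The Python sketch below assumes each exported row is a dict with an `author` key. That key, the function names, and the excluded account names are my assumptions for illustration.

```python
from collections import Counter


def active_contributors(comments, min_comments=2, exclude=("[deleted]", "AutoModerator")):
    """Return (author, comment_count) pairs, most active first."""
    counts = Counter(c["author"] for c in comments if c["author"] not in exclude)
    return [(author, n) for author, n in counts.most_common() if n >= min_comments]


def profile_url(author: str) -> str:
    """Reddit profile pages follow this public URL pattern."""
    return f"https://www.reddit.com/user/{author}"
```

Someone who comments several times in a thread about a problem you solve is a better outreach candidate than a one-off commenter, and `profile_url` gives you a place to start reading their history before you reply.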

Pros, cons, and tips

- Pros: free, official API integration, no external servers, dual-mode scanning, and ready-to-use sheets.

- Cons: you need Reddit API credentials, rate limits apply, and Apps Script runtime limits mean very large scrapes need batching. The sheet does not provide direct profile URLs for every user, though it does include a live link to each post.
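Batching here simply means splitting a long target list into smaller groups and running them one group at a time, so each run finishes before Apps Script cuts it off. A minimal Python sketch of the idea follows; the function name and batch size are hypothetical.

```python
def chunk(items: list, size: int) -> list:
    """Split a list of targets into consecutive batches of at most `size`."""
    if size < 1:
        raise ValueError("size must be at least 1")
    return [items[i:i + size] for i in range(0, len(items), size)]
```

For example, seven targets with a batch size of three become three runs of three, three, and one target. In practice that means keeping only one batch of rows active on the Keywords sheet per run.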

Tips:

- Keep thresholds low to find fresh posts, then sort results by engagement score.

- Run smaller batches for faster completion and to stay under API and script limits.

- Use question detection to zero in on threads where people describe a problem, which are the best source of content ideas and outreach openings.
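Question detection can be as simple as the three checks offered in the Config tab: a question mark, a leading question word, or a question flair. Here is a minimal Python sketch of that logic. The word list and function name are my own assumptions, and the sheet's Apps Script may use different rules.

```python
import re

# Assumed list of question starters; extend it for your niche.
QUESTION_WORDS = (
    "how", "what", "why", "when", "where", "which",
    "who", "can", "does", "is", "should",
)


def is_question(title: str, flair: str = "", check_mark: bool = True,
                check_words: bool = True, flair_only: bool = False) -> bool:
    """Flag a post as a question using the enabled checks."""
    if flair_only:
        # Strict mode: trust only the subreddit's own flair.
        return "question" in flair.lower()
    if check_mark and "?" in title:
        return True
    if check_words:
        # Look at the first word of the title, ignoring leading punctuation.
        first = re.match(r"\W*(\w+)", title.lower())
        return bool(first) and first.group(1) in QUESTION_WORDS
    return False
```

With the default options, "How do I rank a new site" is flagged even without a question mark, while "My site finally ranks" is not. The flair-only option is the strictest and only works in subreddits that use a question flair.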

Credit and where to get help

I built this sheet from inspiration found on GitHub and turned it into a friendly Google Sheets tool. If you want help setting it up or running an SEO campaign using the output, join the community group or book a discovery call.

Final thoughts

The Reddit Scraper gives you a low-friction way to turn real Reddit discussions into content ideas and lead opportunities. Make a copy, set up your API credentials, tweak the config, and let the sheet surface the conversations your customers are having right now. Connect with Ronald Osborne, the marketing and consulting expert, today!